Microsoft detects Apple as spam?

Imagine yourself working at Microsoft 15 years ago, when Windows is the dominant platform and Apple’s share of the computer market is minuscule.

Now imagine you’re working on a spam filter with the best of intentions, and yet your spam filter starts detecting as spam any email sent from a well-known email program on Mac computers. This makes it look like you’re maliciously blocking all email from a competitor.

This is a real problem from the lore of a team I worked on at Microsoft for over a decade.

If one prolific spammer writes his emails in a program on a Mac computer and sends them to millions of people, far outnumbering the legitimate emails sent from that same program, it is very easy for a machine learning model to simply learn that all emails from that program (or even platform) are spam.
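To make this concrete, here is a minimal sketch of how a purely count-based model ends up treating a mail program as a spam signal. The client names and message counts are invented for illustration, and a real filter would combine many more features, but the same effect applies to any feature dominated by one high-volume sender.

```python
from collections import Counter

# Hypothetical toy training set of (mail_client, is_spam) pairs.
# One prolific spammer sends 1,000 messages from "MacMailer",
# while legitimate users of that program send only 50.
training = (
    [("MacMailer", True)] * 1000
    + [("MacMailer", False)] * 50
    + [("WinMailer", True)] * 200
    + [("WinMailer", False)] * 800
)

def spam_rate_by_client(rows):
    """Estimate P(spam | client) by simple counting."""
    totals = Counter(client for client, _ in rows)
    spam = Counter(client for client, is_spam in rows if is_spam)
    return {client: spam[client] / totals[client] for client in totals}

rates = spam_rate_by_client(training)

def predict(client, threshold=0.5):
    """Flag a message as spam using only the client feature."""
    return rates[client] > threshold
```

With these made-up counts, every message from the hypothetical "MacMailer" is flagged, including the 50 legitimate ones, because the model has only learned a correlation with the sender's software.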

Algorithmic bias more generally

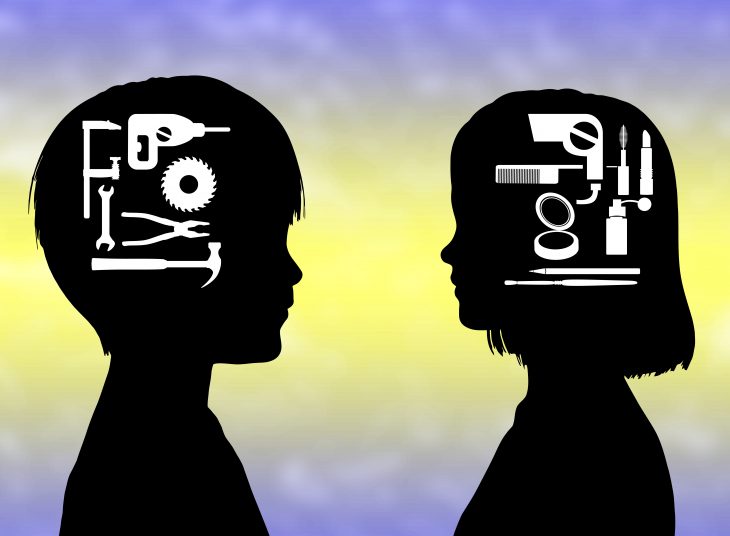

Today the examples of algorithmic bias that get all the attention typically focus on gender bias, which is another serious example of this issue. But this problem is much bigger, and much older.

The focus on the gender bias issue when discussing algorithmic bias is more a reflection of the current cultural focus on gender bias than it is a reflection of where algorithms most commonly go wrong.

If most mail coming from Macs is spam, an algorithm could mistakenly learn to identify mail from a Mac as spam. If most instances of doctors in text online are men, models will tend to reinforce this bias as well. This is the exact same problem.

Algorithms generalize differently than humans

Machine learning models learn statistical correlations, not causal explanations. They infer patterns in the data we provide them, and use these patterns to make decisions like what posts you see in social media or which emails make it through to your inbox.

Humans can act on shallow correlations as well, as evidenced by the research on unconscious bias, which tends to be worst when people are not paying full attention or are multitasking.

In most cases, however, humans put the bits of information they have together and make inferences about the underlying causes of the events, the motives of the people involved, etc. When a human makes an important decision, it is typically informed by a causal perspective.

Algorithms make dumb mistakes

The machine learning algorithms that are changing the world today are the output of research and engineering efforts by very intelligent individuals with strong mathematical backgrounds. This does not mean that the algorithms are smart.

Artificial intelligence gives the illusion of intelligence, and these systems are in charge of many decisions that affect our daily lives. This includes things like the book recommendations we see on Amazon and the Facebook posts that show up in our News Feed.

It’s common for people to think by analogy, and to compare a human making a decision to a computer making a decision. These are very different things; the former is a deliberate act, and the latter an automatic process that is many steps removed from the deliberate acts that created the algorithm.

An algorithm looking for patterns can pick up spurious patterns or real ones. And if they’re real, they may still be based on superficial characteristics that aren’t fundamental to the distinction being made.

This is the source of bias in algorithms that we’re experiencing today. It’s a deep problem that isn’t going to go away as long as computers are dumb and we need fancy mathematical tricks to provide the illusion of intelligence.

Even when the algorithms are created by smart people, they still make dumb mistakes. And they will continue to do so for the foreseeable future, since algorithms operate at a surface level.

Since this is going to be around for a while, we need ways to deal with it.

Jumping to solutions

I’ve just described a broad problem that needs a solution. But just as the problems typically discussed are narrow, usually revolving around gender bias, the solutions proposed are narrow too.

A recent research paper on mitigating unwanted biases in machine learning models focuses entirely on bias with respect to demographic characteristics of people.

The amount of research focused specifically on mitigating gender bias in language models is so extensive that a survey of solutions in this area was accepted into the top conference in the field.

This is indeed a serious problem worth studying in-depth, and those of us working in the field should take this research seriously and act to mitigate bias to the extent this research makes it possible.

Nonetheless, this approach is narrow and misses the broader issue for real-world systems, where gender bias is but one of a multitude of types of bias a model could easily pick up on.

In any real-world application of machine learning models, whether language-based or not, it is this broader issue of bias that needs to be addressed.

Looking for the problem

For the real-world systems people are building today, I’m not aware of any automatic method for detecting bias that can reliably detect even most forms of bias.

We are far from a solution to this problem, and the first step should be looking for the problem itself in new models that are developed or existing models being used in products.

If this can’t be done automatically, this means having a human look at what the system is doing with the purpose of identifying concerning biases. Steps can be taken to look for existing well-known biases, such as gender bias, but no list of possible biases will ever be complete and nothing substitutes for human involvement.

Even working on a spam filter at Microsoft, it would never have occurred to me to look at whether or not we were filtering out all email from Apple. Or any other competitor.

What’s so pernicious about this problem is that the things the algorithms pick up on in cases of bias are non-essential to the task at hand.

It is not natural or feasible for a person building such a model to think through all the non-essentials that could potentially get misunderstood and lead to bias. It is even less possible to check for each of these one-by-one.

The most critical way to measure and mitigate bias is to have humans look at a portion of the decisions being made in the real world and look for signs of bias. When found, the underlying causes of the problems can be identified and mitigated, but they first have to be identified.

Privacy

I just said humans should look at data to mitigate bias. This is at odds with privacy concerns.

Even though I know many people who care about privacy, and also many people who care about reducing algorithmic bias, I haven’t personally heard a single person note the fact that these two issues are related in this unfortunate manner.

It’s certainly possible to use automated means to look for known or suspected biases without manually looking at data, and this is helpful.

It’s possible to use external datasets to look for bias without looking at sensitive user data, and this can help, although only to the extent the two datasets are similar.

If you want to detect unexpected biases, and to do so in the context in which a real-world model is actually being used, there’s no substitute for looking at small samples of real user data. Identifying bias, and thus remediating it, is inextricably linked to activities that pose privacy concerns.

Finding a balanced approach

This is a tricky trade-off, and there are many ways this problem can be approached.

A team can be very respectful of privacy and still reduce even unknown types of bias by looking at examples of how its model performs on public datasets, identifying as many biases as possible without raising privacy concerns.

This team could also invest in automated approaches to detect known types of bias on the private data, so a human can spot the bias by looking at aggregated statistics rather than raw data. Some bias will still go undetected, but this is one reasonable and responsible approach that balances these tricky issues.
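As a rough sketch of what such an aggregated check might look like, the snippet below computes how often the model flags items in each group and surfaces groups that are flagged almost entirely. The function names, the grouping key, and the thresholds (`max_rate`, `min_count`) are assumptions chosen for illustration, not a recommended standard.

```python
from collections import defaultdict

def flag_rate_by_group(decisions):
    """Aggregate (group, was_flagged) pairs into {group: (flag_rate, count)}."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in decisions:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {g: (f / n, n) for g, (f, n) in counts.items()}

def suspicious_groups(decisions, max_rate=0.9, min_count=100):
    """Return groups with enough traffic that are flagged at or above max_rate."""
    stats = flag_rate_by_group(decisions)
    return sorted(
        g for g, (rate, n) in stats.items()
        if n >= min_count and rate >= max_rate
    )
```

A reviewer sees only group names and rates, never message contents. The limitation is the one already noted: this only helps for groupings someone thought to compute.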

Alternatively, a team could build a system that lets users opt into a program where they allow a very small, random portion of their data to be looked at by a human to reduce bias.

This requires building controls to let users exclude specific sensitive data they want to keep private, and it still runs the risk of missing bias due to having a non-representative sample to work with. Nonetheless, this is another responsible approach at a slightly different spot on the bias-privacy spectrum of trade-offs.
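One way such an opt-in program could select items for review is sketched below. The message fields (`user`, `excluded`), the function name, and the 1% default rate are all hypothetical; the point is only that sampling is restricted to opted-in users and skips anything a user has marked as private.

```python
import random

def sample_for_review(messages, opted_in_users, rate=0.01, seed=None):
    """Pick a small random sample of reviewable messages.

    messages: list of dicts with a "user" key and an optional "excluded" flag.
    opted_in_users: set of user ids who agreed to human review.
    """
    rng = random.Random(seed)
    eligible = [
        m for m in messages
        if m["user"] in opted_in_users and not m.get("excluded", False)
    ]
    if not eligible:
        return []
    # Always review at least one item when anything is eligible.
    k = max(1, round(len(eligible) * rate))
    return rng.sample(eligible, k)
```

Because only opted-in users contribute, the sample may not represent the full population, which is exactly the non-representativeness risk described above.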

Of course more extreme options are also possible. Fortunately, there are ways to make progress in these areas without abandoning privacy and without letting bias go unchecked.
