The average Internet user has been the victim of multiple cybercrimes. It’s an enormous problem, and cybercriminals are rarely prosecuted.

Artificial intelligence is the most important tool we have to fight cybercrime.

The threats are always changing. Today we have Russian bots influencing US presidential elections, credit cards stolen in massive data breaches, fake news stories, and innumerable other challenges.

The older problems haven’t gone away. We still have websites trying to steal our passwords, computer viruses, and the other challenges that have been around for decades. Some of these have been mitigated over time, but none have disappeared.

We can use email spam as an example to show how a large-scale abuse problem can be mitigated with artificial intelligence. This will also highlight how, even after decades of work, artificial intelligence is a useful tool but can’t fully solve the problem.

The spam example

Gary Thuerk sent the first spam email in 1978, and spam became a substantial problem as it grew into a thriving business for spammers in the decades that followed.

In 2002, Paul Graham famously published “A Plan for Spam,” in which he proposed an artificial intelligence-based approach to building an effective spam filter. While this particular approach turned out to be relatively ineffective, the basic idea of using artificial intelligence did prove effective once the problem was framed the right way.

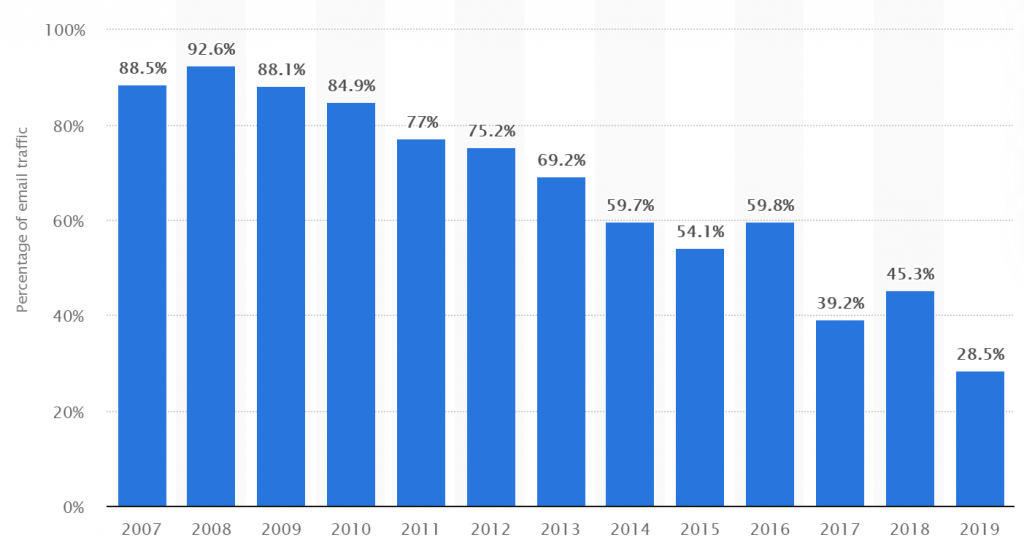

This took a while. At its peak, spam represented 93% of all email on the Internet. In 2008, however, other AI-based approaches finally enabled us to turn the corner on spam, which has been in decline ever since.

Content filtering with AI doesn’t work

Filtering content is currently a hot topic in the social media realm as Facebook and Twitter combat challenges like fake news, medical misinformation, and others.

The first AI-based approach to content filtering was spam filtering, and this approach was a failure. Facebook and Google employ tens of thousands of content moderators to serve as a backstop for the algorithms since they can’t solve the problem on their own.

The biggest problem with content filtering when there’s an adversary, like a spammer, is that the adversary can easily find ways to evade such a filter.

An effective filter needs to detect most of the bad content without detecting much legitimate content. The range of legitimate things people can say is incredibly broad. All an abuser needs to do is find one message that the filter thinks is legitimate before starting an attack.

On these major platforms, the bad guys can create fake accounts. They can quickly see whether their content is being detected by testing it out. The cost of changing the particular words used is essentially zero, so all they need to do is try a bunch of things until one works before sending it out to millions of people.

It’s not that the technology isn’t good enough to catch a bunch of bad stuff. Rather, the abusers can wait until they find something that beats the filter before sending it. This means most of the abuse sent broadly avoids detection even if the filter catches most of the trial attempts. This approach is doomed to fail.
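To make the probing argument concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the toy phrase-matching filter, the blocked phrases, the message variants, and the broadcast size. Real filters are far more sophisticated, but the attacker’s loop looks the same.

```python
# Hypothetical toy filter: flags a message if it contains a known bad phrase.
BLOCKED_PHRASES = {"cheap pills", "free money", "act now"}


def filter_flags(message: str) -> bool:
    """Return True if the toy filter would flag this message."""
    text = message.lower()
    return any(phrase in text for phrase in BLOCKED_PHRASES)


def probe_until_pass(variants):
    """Try variants from a throwaway account until one gets through.

    Returns the first variant that passes (or None) and the number of
    trial attempts the filter caught along the way.
    """
    caught = 0
    for message in variants:
        if filter_flags(message):
            caught += 1
        else:
            return message, caught
    return None, caught


# Made-up variants; changing the words costs the attacker nothing.
variants = [
    "Cheap pills, act now!",
    "FREE MONEY inside",
    "Act now for cheap pills",
    "Ch3ap p1lls, limited offer",
]

winner, caught = probe_until_pass(variants)

# Only the winning variant is sent broadly, so the filter sees none of it.
broadcast_size = 1_000_000
broadcast_caught = broadcast_size if winner is None or filter_flags(winner) else 0

print(f"trial attempts caught: {caught} of {caught + 1}")
print(f"broadcast messages caught: {broadcast_caught} of {broadcast_size}")
```

With these made-up variants, the filter catches three of the four trial messages, yet none of the million broadcast copies.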

This may make the problem seem hopeless, but there is a simple approach that works well.

Identity is critical

Spam, like most other online abuse, is a business. A small number of people and organizations are responsible for a large portion of the abuse. It costs them nothing to change the words they use, but they do have other things that cost money.

When they build a website to link to from their spam emails, they need to pay to register the domain name. They need a computer to host the site. They need mail servers to send the email from.

These are all assets that cost money.

If a content filter starts detecting an attack, the attacker can just change the words—and it’s free.

If you detect the website being linked to or the server sending the email, the bad guys can switch them out for new ones—but it will cost money.

Entities that stay stable across attacks are the key to stopping large-scale Internet abuse. This doesn’t require detecting abuse before the first attack starts. Instead, it requires detecting a campaign of abuse quickly once it is under way.
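The idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any real platform works: the `Message` fields, the `IdentityBlocklist` class, and the report threshold are all hypothetical, and a simple count of abuse reports stands in for whatever learned model a real system would use to decide that an identity is abusive.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    body: str
    sending_server: str  # address of the mail server that sent it
    linked_domain: str   # domain of the website linked in the body


class IdentityBlocklist:
    """Hypothetical blocklist keyed on costly assets, not on wording."""

    def __init__(self, report_threshold: int = 3):
        self.report_threshold = report_threshold
        self.reports = defaultdict(int)
        self.blocked = set()

    def report_abuse(self, msg: Message) -> None:
        # Count the report against every paid-for asset behind the message.
        for identity in (msg.sending_server, msg.linked_domain):
            self.reports[identity] += 1
            if self.reports[identity] >= self.report_threshold:
                self.blocked.add(identity)

    def is_blocked(self, msg: Message) -> bool:
        # The body is never inspected, so rewording does not help.
        return (msg.sending_server in self.blocked
                or msg.linked_domain in self.blocked)


blocklist = IdentityBlocklist(report_threshold=3)

# The campaign starts: same server and domain, different wording each time.
for body in ("Cheap pills!", "Ch3ap p1lls", "Discount medication"):
    blocklist.report_abuse(Message(body, "203.0.113.7", "pills.example"))

# New wording and even a new domain, but the same mail server.
reworded = Message("Totally new text", "203.0.113.7", "newpills.example")
unrelated = Message("Weekly newsletter", "192.0.2.10", "newsletter.example")

print(blocklist.is_blocked(reworded))   # blocked via the sending server
print(blocklist.is_blocked(unrelated))  # unrelated sender is unaffected
```

The first few messages of the campaign get through, which matches the point above: the goal is to catch the campaign quickly, then make the attacker pay for new assets to continue.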

Establishing identity is the most important tool in stopping abuse online. This is true for spam, and it’s also true for most of the other abuse problems.

Artificial intelligence is indeed stopping most spam. But it’s doing so by finding identities that are responsible for these attacks so that future content from them can be prevented.

Why is there still abuse?

If artificial intelligence works so well at identifying the bad guys, this raises an obvious question: why do we still have abuse?

When a tech platform identifies an abuser, it can cut off future activity from that account. But it can’t stop them from coming back the next day. Facebook reports taking down 6.7 billion fake accounts last year.

We can limit how much they use a given account, or server, or website. But there are some entities they can just create more of, like free accounts. For years now, much of the spam that gets through has come from free email accounts rather than directly from spammers’ computers. Spammers can also use various tools, like VPNs, to disguise their identity.

The core of the problem is that the identities being used don’t correspond directly to people. It’s not obvious over the Internet who a spammer really is, which makes it impossible to tie the many accounts involved in an attack to the same person.

And tech platforms can only stop future attacks when they recognize the identity of the attacker. If those attackers can continually buy new websites and create new accounts, they can always continue their attacks.

AI can make attacks uneconomical for most attackers, but there is always a window before a new attack is detected, and the bad guys can monetize that window. AI can lower the ROI, but it can’t eliminate it.
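A back-of-the-envelope calculation shows how the detection window drives the economics. The function and every number below are hypothetical, chosen only to make the arithmetic easy to follow; they are not measurements of any real campaign.

```python
def campaign_roi(messages_per_hour: float,
                 detection_window_hours: float,
                 revenue_per_message: float,
                 asset_cost: float) -> float:
    """Return on investment for one set of assets (domain, server, accounts).

    Assumes the attacker earns money only until the assets are detected
    and blocked, and must then buy new ones. asset_cost must be positive.
    """
    messages_delivered = messages_per_hour * detection_window_hours
    revenue = messages_delivered * revenue_per_message
    return (revenue - asset_cost) / asset_cost


# Made-up campaign: 100,000 messages an hour, a hundredth of a cent of
# revenue per message, and $20 spent on the assets behind it.
for window_hours in (24, 4, 1):
    roi = campaign_roi(
        messages_per_hour=100_000,
        detection_window_hours=window_hours,
        revenue_per_message=0.0001,
        asset_cost=20.0,
    )
    print(f"detected after {window_hours:>2} h: ROI = {roi:.2f}")
```

In this toy model, faster detection shrinks the return and eventually pushes it below zero for this attacker. An attacker with cheaper assets or better revenue per message could still profit inside the same window, which is why the window can be narrowed but not closed.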

Conclusion

AI is the most critical tool we currently have for fighting cybercrime. Using AI to fight cybercrime can be very effective, but only if the problem is framed correctly. AI can detect the identities responsible for abuse so that future abuse from those same identities can be blocked. This is the most successful approach yet developed. It can’t stop the abuse completely, but it can drive up abusers’ costs so that most of them go out of business.